How to benchmark your software

Benchmarking in software development means measuring the performance of a piece of code, usually to find out how long it takes to run. Software benchmarking is notoriously difficult to do accurately and well, and in Beautiful Canoe we rarely need to produce benchmarks as rigorous as those you might find in an academic paper.

However, we do sometimes need to measure our software, and this document describes some of the pitfalls that you might encounter when doing this.

Benchmarking guidelines

If you want to find out how long a piece of software takes to run, you should never time it only once, and certainly never on a busy development machine.

The standard Beautiful Canoe benchmarking harness is multitime, by Laurie Tratt, which automates repeated benchmarking and produces output like this:

    ===> multitime results
    1: awk "function fib(n) { return n <= 1 ? 1 : fib(n - 1) + fib(n - 2) } BEGIN { fib(30) }"
                Mean              Std.Dev.  Min     Median  Max
    real        0.338+/-0.0096    0.031     0.290   0.331   0.403
    cpu         0.337+/-0.0094    0.031     0.290   0.345   0.361
    user        0.336+/-0.0093    0.030     0.290   0.331   0.401
    sys         0.001+/-0.0001    0.003     0.000   0.000   0.008
    $

This lists the real (i.e. wallclock) time taken by the software under test (SUT), the CPU time it spent in user mode and in system (kernel) mode, and its total CPU time.
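multitime collects these figures for you, but it can help to see where they come from. The following is a minimal Python sketch, not how multitime itself is implemented, assuming a Unix system (the `resource` module is not available on Windows); `run_and_time` and `repeat` are hypothetical names. It times a command several times, recording wallclock time with `time.perf_counter` and the child's user and system CPU time from `resource.getrusage`:

```python
import resource
import subprocess
import sys
import time


def run_and_time(argv: list[str]) -> tuple[float, float, float]:
    """Run argv once and return (real, user, sys) times in seconds.

    real is wallclock time; user and sys are the CPU time the child spent
    in user mode and in kernel mode, taken from getrusage(RUSAGE_CHILDREN).
    Unix-only, because the resource module is not available on Windows.
    """
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.perf_counter()
    subprocess.run(argv, check=True, stdout=subprocess.DEVNULL)
    real = time.perf_counter() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    # RUSAGE_CHILDREN accumulates over all waited-for children, so take deltas.
    user = after.ru_utime - before.ru_utime
    sys_time = after.ru_stime - before.ru_stime
    return real, user, sys_time


def repeat(argv: list[str], runs: int) -> list[tuple[float, float, float]]:
    """Time argv `runs` times; never rely on a single measurement."""
    return [run_and_time(argv) for _ in range(runs)]


if __name__ == "__main__":
    # Smoke test: time a trivial Python process five times.
    for real, user, sys_time in repeat([sys.executable, "-c", "pass"], 5):
        print(f"real {real:.3f}  user {user:.3f}  sys {sys_time:.3f}")
```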

The numbers that we are usually interested in are the mean wallclock time and either the confidence interval or the standard deviation. The standard deviation describes how much individual runs vary, while the confidence interval describes how precisely the mean has been estimated.

In the example above, the mean wallclock time is 0.338 seconds, its confidence interval is +/-0.0096 seconds, and the standard deviation is 0.031 seconds.
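The summary columns can be reproduced from a list of raw timings with Python's `statistics` module. This sketch assumes the sample standard deviation (with n - 1 in the denominator); multitime's exact formulas may differ, and `summarise` is a hypothetical helper:

```python
import statistics


def summarise(timings: list[float]) -> dict[str, float]:
    """Return summary columns like those multitime prints for one row."""
    if len(timings) < 2:
        raise ValueError("need at least two timings to estimate variability")
    return {
        "mean": statistics.mean(timings),
        # Sample standard deviation (n - 1 in the denominator).
        "stdev": statistics.stdev(timings),
        "min": min(timings),
        "median": statistics.median(timings),
        "max": max(timings),
    }


if __name__ == "__main__":
    # Hypothetical wallclock timings in seconds, not real measurements.
    print(summarise([0.31, 0.29, 0.35, 0.33, 0.40]))
```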

If your version of multitime lets you set the confidence level of the interval it reports, use -c 99 (i.e. a 99% confidence interval).

You should also use as many iterations as you can (set with -n): never fewer than 5, and ideally 30 or more.
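Both of these choices affect the width of the confidence interval. Under a normal approximation (an assumption; multitime may use a different formula, such as one based on Student's t distribution), the half-width of the interval is `z * s / sqrt(n)`, where `s` is the standard deviation, `n` the number of runs and `z` the quantile for the chosen confidence level. A 99% interval is therefore wider than a 95% one for the same data, and going from 5 to 30 runs shrinks the interval by a factor of sqrt(6), about 2.4. `ci_half_width` is a hypothetical helper:

```python
from math import sqrt
from statistics import NormalDist


def ci_half_width(stdev: float, n: int, level: float = 0.99) -> float:
    """Half-width of a confidence interval for the mean.

    Uses the normal approximation z * s / sqrt(n). This is an assumption:
    for small n a Student's t quantile gives a wider, more honest interval.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return z * stdev / sqrt(n)


if __name__ == "__main__":
    s = 0.031  # standard deviation from the example multitime output
    for level in (0.95, 0.99):
        for n in (5, 30):
            print(f"{level:.0%} CI, n={n:2}: +/-{ci_half_width(s, n, level):.4f}")
```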

Benchmarking JIT-ed languages

Languages such as Java and C# are usually run on virtual machines with just-in-time (JIT) compilers, rather than being compiled ahead of time to machine code. These VMs monitor the code they are running and look for hot loops: code that runs repeatedly and might cause a bottleneck. They then compile hot loops down to optimised machine code, which is intended to speed the program up. The period before this optimised code is in place, while the program is still running slowly, is called the warm-up phase of the program execution.

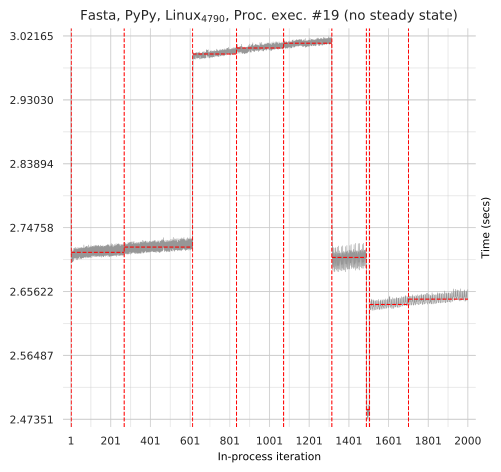

You might therefore expect that a simple loop benchmarked on a JIT-ed VM would run slowly for a while, and that subsequent iterations would then be much faster. However, the paper Virtual Machine Warmup Blows Hot and Cold found that this does not always happen: not only do some simple iterated benchmarks fail to improve their performance, some do not reach a steady state at all.

If you need to benchmark code on a JIT-ed VM, you should be aware of these performance issues. You may well want to run your software under test in a loop for a large number of iterations, but do not be tempted to drop the first few warm-up iterations from your dataset. There is no guarantee that the JIT will ever make your code faster, so those iterations may be just as representative as the later ones.
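A minimal sketch of an in-process iteration harness that keeps every iteration, including warm-up, and then makes a crude comparison of the first and last few iterations. The standard CPython interpreter does not JIT-compile code by default, so to see warm-up effects you would run this under a JIT-ed implementation such as PyPy. `time_iterations`, `first_vs_last` and the iteration counts are hypothetical, and the comparison is far cruder than the changepoint analysis used in the paper above:

```python
import time
from statistics import mean
from typing import Callable


def time_iterations(fn: Callable[[], object], iterations: int) -> list[float]:
    """Call fn repeatedly in one process, recording every iteration's time.

    Warm-up iterations are kept: discarding them would assume the JIT has
    reached a faster steady state, which may never happen.
    """
    times = []
    for _ in range(iterations):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    return times


def first_vs_last(times: list[float], window: int) -> tuple[float, float]:
    """Crude check: mean of the first and of the last `window` iterations.

    Only flags obvious speed-ups or slowdowns; it cannot tell whether a
    steady state was ever reached.
    """
    if window <= 0 or 2 * window > len(times):
        raise ValueError("window must be positive and fit twice into times")
    return mean(times[:window]), mean(times[-window:])


def fib(n: int) -> int:
    # Same workload as the awk example in the multitime output.
    return 1 if n <= 1 else fib(n - 1) + fib(n - 2)


if __name__ == "__main__":
    times = time_iterations(lambda: fib(20), 200)
    early, late = first_vs_last(times, 20)
    print(f"first 20: {early:.6f}s  last 20: {late:.6f}s")
```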

The benchmarking environment

For a benchmark to give an accurate measure of how much time a piece of software takes to perform a computation (as opposed to an accurate measure of the performance a user might experience), the software under test would ideally be the only code running. In reality, it is impossible to remove all of the measurement noise that is created by the firmware, hardware and operating system of the machine that you are using.

If you are benchmarking on your own machine, you should at least close heavyweight applications such as web browsers and Slack, and be aware that your results will still not be reliable. If you are benchmarking on one of the Beautiful Canoe servers, be aware that other pipelines and deployments may well be running on the same server and will create noise.
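A harness cannot remove this noise, but it can at least warn you when the machine already looks busy. A minimal Python sketch, assuming a Unix system where `os.getloadavg` is available; the function name `machine_is_quiet` and the default threshold of 0.1 load per CPU are hypothetical choices, not a Beautiful Canoe convention:

```python
import os


def machine_is_quiet(max_load_per_cpu: float = 0.1) -> bool:
    """Return True if the 1-minute load average per CPU is below a threshold.

    Unix-only (os.getloadavg). The default threshold is a hypothetical
    starting point and should be tuned for your server.
    """
    one_minute, _, _ = os.getloadavg()
    cpus = os.cpu_count() or 1
    return one_minute / cpus < max_load_per_cpu


if __name__ == "__main__":
    if not machine_is_quiet():
        print("Warning: machine is busy; benchmark results will be noisy.")
```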

Using multitime will help to account for some of the noise, and you should certainly never take only one measurement on a noisy machine.

If you need to obtain a slightly more reliable measurement, you should arrange for your benchmarks to be scheduled at a time when the server is likely to be as quiet as possible. This will usually mean in the early hours of the morning, when Beautiful Canoe staff are not working.

When to use the devops/benchmarking service

Beautiful Canoe maintains a benchmarks repository which holds the results of scheduled benchmarks and displays them on a website. This service is intended to be used for regular, periodic benchmarking, where we want to track the performance of a piece of code over time to ensure we don't have any performance regressions.

If you need to do this, the README in the benchmarks repository describes how to set the service up in your own repository to generate performance-per-commit charts.

Your benchmarks should run as scheduled pipelines in your repository, and you should take care to avoid scheduling your pipeline jobs when they might clash with cron.daily jobs on the same server.

On Ubuntu 18.04, the default system crontab (/etc/crontab) contains:

    # m h dom mon dow user  command
    17 *  * * *  root  cd / && run-parts --report /etc/cron.hourly
    25 6  * * *  root  test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.daily )
    47 6  * * 7  root  test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.weekly )
    52 6  1 * *  root  test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.monthly )
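To check a proposed schedule against these times, the sketch below tests whether a job's time window comes near the 06:25 (cron.daily), 06:47 (cron.weekly, Sundays only) and 06:52 (cron.monthly, 1st of the month only) start times. It ignores cron.hourly, which runs at 17 minutes past every hour. Note also that the `test -x /usr/sbin/anacron ||` guard means that if anacron is installed, these lines do nothing and anacron runs the jobs instead at its own times, so check what is actually installed on the server. `clashes_with_cron` and the 15-minute safety margin are hypothetical:

```python
import datetime as dt

# Start times of the cron.daily, cron.weekly and cron.monthly entries in the
# default Ubuntu 18.04 /etc/crontab. Weekly and monthly jobs are treated as
# if they ran every day, which errs on the side of caution.
CRON_STARTS = [dt.time(6, 25), dt.time(6, 47), dt.time(6, 52)]
MINUTES_PER_DAY = 24 * 60


def to_minutes(t: dt.time) -> int:
    """Convert a time of day to minutes after midnight."""
    return t.hour * 60 + t.minute


def clashes_with_cron(start: dt.time, duration_min: int, margin_min: int = 15) -> bool:
    """Return True if a job starting at `start` and running for `duration_min`
    minutes comes within `margin_min` minutes of a cron start time.

    Cron jobs are treated as instantaneous here, although in practice they
    may keep running for some time after they start.
    """
    begin = to_minutes(start) - margin_min
    end = to_minutes(start) + duration_min + margin_min
    # Shift each cron start by a day either way so that windows crossing
    # midnight are handled.
    return any(
        begin <= to_minutes(t) + shift <= end
        for t in CRON_STARTS
        for shift in (-MINUTES_PER_DAY, 0, MINUTES_PER_DAY)
    )


if __name__ == "__main__":
    print(clashes_with_cron(dt.time(2, 0), 60))  # a one-hour job at 02:00
    print(clashes_with_cron(dt.time(6, 0), 45))  # a job running across 06:25
```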

You will occasionally also want to compare the performance of a feature branch to a long-running branch (main or develop), to check that a change does not introduce a regression.

In this case, we suggest adding a separate, manually triggered CI job that runs your benchmarks on feature branches, and then comparing the results with the performance shown on the benchmarks website charts.
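Comparing a feature branch with main or develop is easiest if you have raw timings for both, measured on the same machine under similar conditions. A minimal sketch of one conservative check, assuming you have a list of wallclock timings for each branch (`interval` and `looks_like_regression` are hypothetical helpers): it flags a regression only when the feature branch's 99% confidence interval lies entirely above the baseline's. Non-overlapping intervals are strong evidence of a slowdown, but overlapping intervals do not prove there is none, and the normal approximation used here is an assumption rather than a formal significance test:

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def interval(timings: list[float], level: float = 0.99) -> tuple[float, float]:
    """Normal-approximation confidence interval for the mean (an assumption)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half = z * stdev(timings) / sqrt(len(timings))
    m = mean(timings)
    return m - half, m + half


def looks_like_regression(baseline: list[float], feature: list[float],
                          level: float = 0.99) -> bool:
    """Flag a regression if the feature branch's interval lies entirely
    above the baseline's, i.e. the feature branch is clearly slower.
    """
    _, base_high = interval(baseline, level)
    feature_low, _ = interval(feature, level)
    return feature_low > base_high


if __name__ == "__main__":
    # Hypothetical timings in seconds, not real measurements.
    main_branch = [1.00, 1.01, 0.99, 1.00, 1.02, 0.98]
    feature_branch = [1.20, 1.21, 1.19, 1.20, 1.22, 1.18]
    print(looks_like_regression(main_branch, feature_branch))
```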

In general, benchmark results from feature branches should not be stored outside the repository in which the branch was created, as we want to encourage developers to merge quickly and regularly.